As someone who’s involved in building software, it’s only natural for me to follow the trends in the technology industry and to experience them firsthand. There are many opinions about how we will build software in the future and what that means for IT as a whole.

This post was very much inspired by NVIDIA CEO Jensen Huang’s Special Address at AI Summit India. (You can watch the video at the end of the post.)

Let’s begin.

For decades, the world has been riding a wave of rapid technological advancements.

Much of this was thanks to Moore’s Law, Gordon Moore’s prediction that the number of transistors on a chip would double roughly every two years. More transistors meant more computing power, while the cost of that power kept falling.
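To make the compounding concrete, here is a back-of-the-envelope sketch. It assumes a clean doubling every two years, which real chips never tracked this neatly, so treat the numbers as an idealized upper bound rather than history. Growth after t years is simply 2^(t/2).

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Idealized growth factor after `years`, assuming one doubling every `doubling_period` years."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    # A decade, two decades, and roughly the span since 1964.
    for span in (10, 20, 60):
        print(f"{span} years -> ~{moores_law_factor(span):,.0f}x")
```

Under that assumption a single decade gives a 32x improvement, and sixty years compounds to about a billion-fold, which is why "just wait for the next chip" worked for so long.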

This steady evolution has fueled innovation, making technology cheaper, faster, and more accessible.

Businesses around the globe have benefited from this phenomenon, relying on ever-increasing computing capabilities without making substantial changes to their software. The hardware just got better, and everything else kept up.

But today, according to Jensen Huang, this incredible run of automatic progress is coming to an end. We’ve hit the wall.

Scaling up CPUs, the brains behind our computers, has run into physical limits. Moore’s Law, the free ride we’ve relied on to keep improving computing power, is no longer delivering at the pace we need.

Without it, we’re faced with a critical question:

How do we keep advancing?

How do we keep building more powerful technology without costs spiraling out of control?

The answer lies in a complete reinvention of computing!

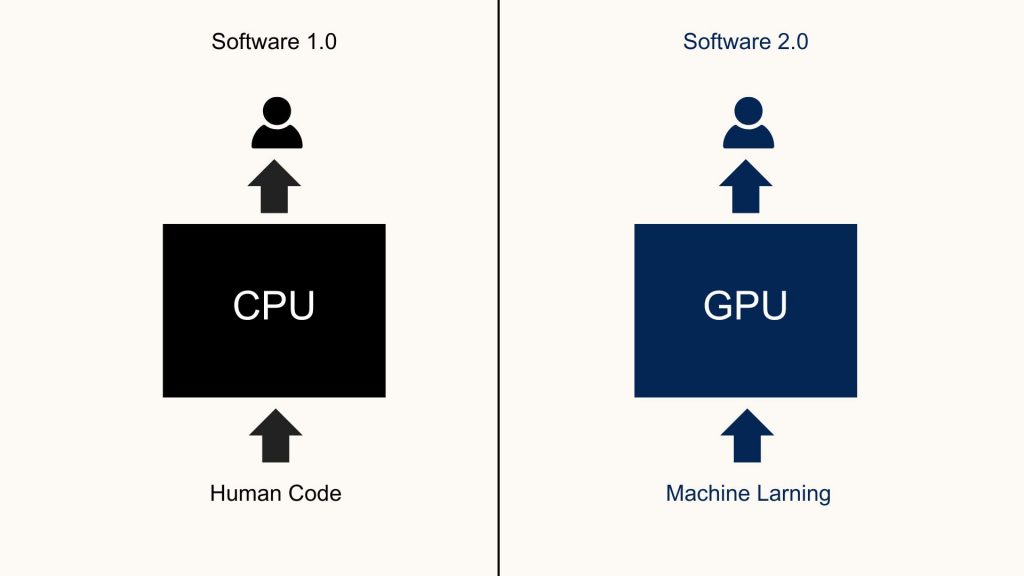

A shift from the way we have always thought about software and hardware – a shift from what Jensen Huang calls “Software 1.0” to “Software 2.0.”

Software 1.0: The Era of General-Purpose Computing

To understand where we’re heading, it’s helpful to look back at where we’ve been.

The era we are now leaving behind, “Software 1.0,” was defined by general-purpose computing.

This idea emerged around 1964, when IBM introduced System/360.

This was a breakthrough: thanks to its operating system and a central processing unit (CPU) that could multitask, one computer could run many different types of applications.

The separation of hardware and software, as well as the compatibility across different generations of computers, allowed companies to make long-term investments in their software without needing to replace it every time they upgraded their hardware.

General-purpose computing became the backbone of the IT industry.

The CPUs at the core of our computers kept getting faster and more efficient, and Moore’s Law allowed us to achieve this without fundamentally changing how we wrote or ran software.

All a company had to do was wait for the next chip upgrade, and suddenly everything would run twice as fast for half the cost.

For almost 60 years, this formula has driven the growth of the software industry, from the early mainframes to personal computers to today’s smartphones.

But as we approach the limits of how small and efficient we can make a CPU, it has become clear that relying on Moore’s Law to magically solve our performance and cost challenges is no longer an option.

To continue innovating, we need a new way to scale. Enter Software 2.0.

Software 2.0: The Age of Accelerated Computing

According to Jensen Huang, “Software 2.0” is all about breaking free from the limitations of general-purpose CPUs by rethinking how we use computing power. Instead of expecting a single chip to do it all, we’re moving towards accelerated computing.

In this model, we still have CPUs, but we offload specific, complex tasks to specialized processors that are designed to handle them more efficiently.

A good example of this shift is NVIDIA’s CUDA, a programming model that allows developers to take tasks that would traditionally run on a CPU and instead run them on a GPU – a Graphics Processing Unit.

GPUs are fundamentally different from CPUs.

While a CPU is built to handle a wide variety of tasks, a GPU is designed to handle thousands of simple tasks simultaneously.

Originally created for rendering graphics, GPUs are perfect for workloads that involve large amounts of data and parallel operations—like training artificial intelligence models, processing vast datasets, or simulating physical systems.
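The core idea behind a CUDA kernel is that one small function runs once per data element, each instance identified by its index, and the GPU runs thousands of those instances at the same time. The sketch below imitates that pattern in plain Python so it runs anywhere; it illustrates the programming model and is not CUDA code. The names `saxpy_kernel` and `launch` are made up for this example, and the operation is SAXPY (out = a*x + y), a common textbook case.

```python
from typing import Callable, List


def saxpy_kernel(i: int, a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """Work done by ONE 'thread': it touches only element i, so every i is independent."""
    out[i] = a * x[i] + y[i]


def launch(kernel: Callable[..., None], n: int, *args) -> None:
    """Stand-in for a GPU launch: invoke the kernel once per index.

    A GPU would run these invocations in parallel; here they run one after another,
    which gives the same result precisely because no invocation depends on another.
    """
    for i in range(n):
        kernel(i, *args)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    y = [10.0, 20.0, 30.0, 40.0]
    out = [0.0] * len(x)
    launch(saxpy_kernel, len(x), 2.0, x, y, out)
    print(out)  # [12.0, 24.0, 36.0, 48.0]
```

In real CUDA C++ the loop in `launch` disappears: each GPU thread computes its own index (typically `blockIdx.x * blockDim.x + threadIdx.x`) and handles that one element.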

By pairing CPUs with GPUs, we can massively accelerate the kinds of calculations that used to be bottlenecks, allowing us to keep scaling without relying on Moore’s Law.

This shift isn’t just about swapping one chip for another.

It requires a new way of thinking about software. In the traditional model, programmers would write code, compile it, and run it on a CPU.

With accelerated computing, you need to rethink your entire application.

What parts of the workload are best suited to a GPU?

How do you break your code into components that can run in parallel?
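One common answer to that question is to split the data into independent chunks, process each chunk on its own, and combine the partial results at the end (a "map" step followed by a "reduce" step). The sketch below does this for a sum of squares using Python’s standard `concurrent.futures`. The thread pool is only a stand-in for parallel hardware (CPython threads won’t actually speed up pure-Python arithmetic), and the default of four chunks is an arbitrary assumption.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split_into_chunks(data: Sequence[int], n_chunks: int) -> List[Sequence[int]]:
    """Cut data into at most n_chunks contiguous, non-overlapping slices."""
    if not data:
        return []
    size = -(-len(data) // n_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_of_squares(chunk: Sequence[int]) -> int:
    """Independent unit of work: it needs nothing from any other chunk."""
    return sum(v * v for v in chunk)


def parallel_sum_of_squares(data: Sequence[int], n_chunks: int = 4) -> int:
    chunks = split_into_chunks(data, n_chunks)
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        # "Map" step: each chunk is processed concurrently.
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # "Reduce" step: combine the partial results.
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1, 101))))  # 338350
```

The important design property is that no chunk reads another chunk’s data, so the pieces can run in any order, or all at once, and still produce the same answer.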

This new approach requires rewriting software, reimagining how problems are solved, and often, learning entirely new programming tools.

Implementing Software 2.0: How Do We Get There?

The transition from general-purpose computing to accelerated computing is not a simple one.

It’s a fundamental shift that requires cooperation across the entire technology stack – from hardware manufacturers to software developers to end users.

So, how do we implement Software 2.0?

The first step is to recognize that accelerated computing is not a one-size-fits-all solution.

Unlike the CPUs that powered Software 1.0, there is no magical processor that can accelerate every task.

If there were, we would simply call it a CPU!

Instead, companies like NVIDIA have created a range of specialized chips and software frameworks for different industries and applications.

There are GPUs for graphics, for AI, for scientific calculations, and more.

On top of these chips sit programming tools like CUDA, along with domain-specific libraries, which developers use to create software that makes the most of these accelerators.

The transition also involves creating digital twins – virtual replicas of physical systems – where AI models can be trained and refined before being deployed in the real world.

This requires powerful simulation environments like NVIDIA’s Omniverse, which allows developers to build and test robots, autonomous vehicles, and other complex systems in a virtual space before they hit the factory floor or the street.
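To make the "refine it in simulation first" idea concrete, here is a deliberately tiny sketch: a hypothetical virtual replica of a heated room, modeled as a simple first-order thermal system, is used to pick a controller gain before anything touches real hardware. The gain stands in for the "model" being refined. The physics, constants, and candidate gains are all invented for illustration; real digital twins, such as those built in Omniverse, are far more detailed.

```python
from typing import List


def simulate_room(gain: float, target: float = 21.0, start: float = 15.0,
                  ambient: float = 10.0, loss: float = 0.1,
                  dt: float = 0.1, steps: int = 500) -> float:
    """Hypothetical virtual room; returns the mean absolute error from the target temperature.

    Heater power is proportional to the error (a P-controller), clipped to [0, 20],
    and the room leaks heat toward the ambient temperature.
    """
    temp = start
    total_error = 0.0
    for _ in range(steps):
        error = target - temp
        power = min(max(gain * error, 0.0), 20.0)  # heater can't cool and has a maximum output
        temp += (power - loss * (temp - ambient)) * dt  # one explicit Euler integration step
        total_error += abs(target - temp)
    return total_error / steps


def tune_in_simulation(candidate_gains: List[float]) -> float:
    """Try every candidate in the virtual replica and keep the one with the lowest error."""
    return min(candidate_gains, key=simulate_room)


if __name__ == "__main__":
    gains = [0.1, 0.5, 1.0, 2.0]
    print(f"best gain in simulation: {tune_in_simulation(gains)}")
```

Only the winning setting would then be tried on the physical system, which is the whole point of a digital twin: the cheap, fast, and safe experiments happen in the virtual copy.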

Companies looking to adopt Software 2.0 need to start by understanding which parts of their operations could benefit from acceleration.

This could mean using GPUs to train AI models faster, deploying specialized chips to optimize supply chain logistics, or using virtual simulations to improve manufacturing processes.
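A useful rule of thumb for deciding what is worth accelerating is Amdahl’s law: if a fraction p of a job’s running time is sped up by a factor s, the whole job speeds up by only 1 / ((1 - p) + p / s). The sketch below applies it; the parallel fractions and the 50x accelerator speedup are illustrative assumptions, not measurements.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the runtime is accelerated by a factor s (Amdahl's law)."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


if __name__ == "__main__":
    # The same hypothetical 50x accelerator, applied to jobs with more or less parallel work.
    for p in (0.5, 0.9, 0.99):
        print(f"p={p:.2f}: overall speedup ~{amdahl_speedup(p, 50):.1f}x")
```

Even a 50x accelerator only gets a job that is half parallel to about 2x overall, while a job that is 99% parallel gets roughly 34x. That is why the first step is finding out which parts of a workload dominate its running time.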

It’s a significant investment, both in terms of money and time, but it’s the only way to stay competitive in a world where the limits of traditional CPUs are holding us back.

When Will the Switch Happen?

The switch from Software 1.0 to Software 2.0 is already underway, but it won’t happen overnight.

Many companies are still heavily invested in their existing software and hardware, and making the leap to accelerated computing will take time.

We’re likely to see a period of hybrid computing, where traditional CPUs and GPU accelerators coexist, as companies gradually rewrite and migrate their software to take advantage of the new hardware.
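In a hybrid setup, a program often decides per task where to run it. Sending work to an accelerator has a fixed cost (moving data to the device and launching the work), so small jobs frequently stay on the CPU. The sketch below shows that routing decision. The threshold value and both "backends" are hypothetical placeholders, and the accelerated path is simulated on the CPU so the example runs anywhere.

```python
from typing import Callable, List, Tuple

# Hypothetical cutoff: below this many elements, offloading overhead would outweigh the gain.
OFFLOAD_THRESHOLD = 10_000


def run_on_cpu(data: List[float], fn: Callable[[float], float]) -> List[float]:
    """Existing 'Software 1.0' path: a plain sequential loop."""
    return [fn(v) for v in data]


def run_on_accelerator(data: List[float], fn: Callable[[float], float]) -> List[float]:
    """Placeholder for a GPU path (e.g. a CUDA kernel); simulated here with the same math on the CPU."""
    return list(map(fn, data))


def dispatch(data: List[float], fn: Callable[[float], float]) -> Tuple[str, List[float]]:
    """Route a data-parallel job: large jobs go to the accelerator, small ones stay on the CPU."""
    if len(data) >= OFFLOAD_THRESHOLD:
        return "accelerator", run_on_accelerator(data, fn)
    return "cpu", run_on_cpu(data, fn)


def double(v: float) -> float:
    return 2.0 * v


if __name__ == "__main__":
    small_target, _ = dispatch([1.0, 2.0, 3.0], double)
    large_target, _ = dispatch([1.0] * 50_000, double)
    print(small_target, large_target)  # cpu accelerator
```

Because both paths compute the same result, teams can move workloads across one at a time, which is roughly what a gradual, hybrid migration looks like in code.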

This transition is being driven by necessity.

As the costs of maintaining and upgrading general-purpose computing systems continue to rise, and as the performance benefits of each new generation of CPU diminish, companies will be forced to explore alternatives.

The first movers – those companies that adopt accelerated computing early – will have a significant advantage.

Why?

Because they’ll be able to process more data, train AI models faster, and develop new products at a speed that just isn’t possible with traditional computing.

So, while there’s no fixed date for when Software 2.0 will become the norm, we can already see the writing on the wall.

The companies that embrace this new model will be the ones that define the future of technology.

Concerns About Monopoly? Is NVIDIA Too Powerful?

With any major shift in technology, there are always concerns about who controls the keys to the future.

In the case of so-called Software 2.0, one of the biggest players is NVIDIA.

The company has built an ecosystem around its GPUs, with its CUDA platform being the de facto standard for accelerated computing.

This has led to concerns that NVIDIA is becoming too powerful – that it could potentially establish a monopoly over the future of computing.

NVIDIA’s dominance means that if you want to take advantage of accelerated computing, you are, in many ways, tied to their hardware and software ecosystem. CUDA, while incredibly powerful, is a proprietary platform.

This raises questions about vendor lock-in, competition, and innovation.

What happens if NVIDIA decides to change its pricing model?

Or if another company comes up with a better technology, but it’s incompatible with the existing NVIDIA ecosystem?

The hope is that competition will continue to drive innovation.

Other companies, like AMD and Intel, are also investing in accelerated computing (AMD with its ROCm platform, Intel with oneAPI), and open standards like OpenCL and SYCL provide alternatives to proprietary solutions like CUDA.

But it’s clear that NVIDIA is currently leading the pack, and it will be up to the rest of the industry to ensure that accelerated computing remains open, competitive, and accessible.

Conclusion – The Future of Software Development

The end of Moore’s Law has brought us to a crossroads.

We can no longer count on CPUs getting faster and cheaper with every generation without any changes to our software.

The future lies in accelerated computing – a new model that requires rethinking how we build and run software, investing in specialized hardware, and embracing a fundamentally different approach to solving computational problems.

This shift from Software 1.0 to Software 2.0 won’t be easy.

It requires new skills, new investments, and a willingness to leave behind the comfort of a model that has worked for nearly six decades.

But the rewards are immense. By embracing accelerated computing, we can continue to push the boundaries of what technology can do, unlocking new possibilities in AI, robotics, healthcare, and countless other fields.

The transition is happening now, and those who adapt early will have a significant advantage.

As we move forward, it’s also important to ensure that this new era of computing remains open and competitive, so that innovation can thrive and the benefits of accelerated computing can reach everyone—not just those who can afford to pay a premium.

The end of software development as we know it is here.

Whether we like it or not.

Vladimir